I’m not very tech-savvy.

I don’t understand why my computer shuts down randomly or why my iPhone is forever trying to update, but like most people, I know enough to function in the digital world.

What I don’t know much about is encryption and cybersecurity and all the scary technological details that I tend to leave to the brains at Apple and our “trusty” government.

Looks like it’s time to educate myself.

This week, a federal judge ordered Apple to provide “reasonable technical assistance” to the government in unlocking the iPhone used by Syed Farook, one of the shooters in the San Bernardino massacre.

At first, it seemed like an obvious choice: “Well of course they should unlock the phone.” My patriotism and sentimentality kicked in and it felt like not unlocking the phone was akin to letting the terrorists win. You can’t use American technology to kill American citizens and expect to benefit from a right to privacy.

But then my rational mind took over and I started investigating. What the government is proposing is that Apple create a program that would switch off the iPhone’s protections against repeated guessing (such as the setting that erases the phone after ten wrong passcodes), so investigators could keep trying passcodes until they find the one that unlocks that phone. Basically, they want Apple to hack its own security system.

Suddenly the issue didn’t seem so obvious.

I wondered about the implications of having a program like this out in the world. How would Apple, or the federal government, regulate this program once it was in existence? And if they could unlock this one iPhone, what would stop them from unlocking others? Would they unlock the phones of everyone Farook texted or called? What would they do once they were inside those phones?

Where do we draw the line? How many people would have their right to privacy revoked if this one iPhone 5C was unlocked for the world?

And of course, I thought about my phone.

My life is on my phone: passwords for my bank account and loans and credit cards; doctor’s appointments and work meetings; private emails and text messages; words I’ve written that I have no intention of sharing; pictures of those I love. What if, with one click of a button (a button I have no way of controlling), my information, my life, could just become public knowledge?

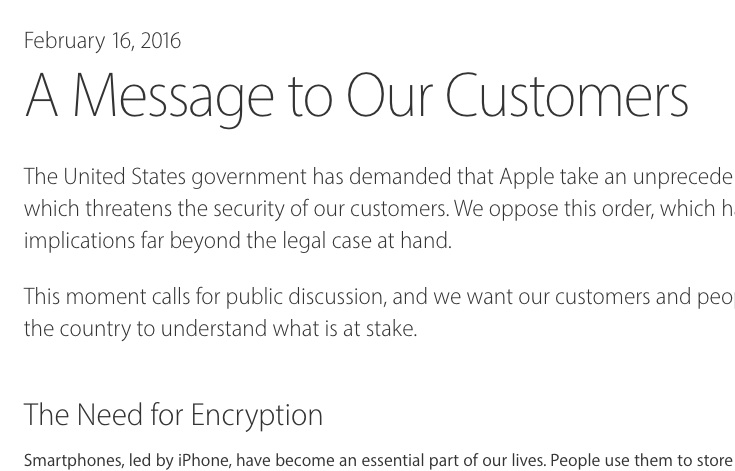

Apple CEO Tim Cook penned what I felt was an honest and heartfelt letter to customers in response to the judge’s order and stated, in part:

“We have great respect for the professionals at the FBI, and we believe their intentions are good. Up to this point, we have done everything that is both within our power and within the law to help them. But now the U.S. government has asked us for something we simply do not have, and something we consider too dangerous to create. They have asked us to build a backdoor to the iPhone.

Specifically, the FBI wants us to make a new version of the iPhone operating system, circumventing several important security features, and install it on an iPhone recovered during the investigation. In the wrong hands, this software—which does not exist today—would have the potential to unlock any iPhone in someone’s physical possession.

The FBI may use different words to describe this tool, but make no mistake: Building a version of iOS that bypasses security in this way would undeniably create a backdoor. And while the government may argue that its use would be limited to this case, there is no way to guarantee such control.”

Cook also discussed the government’s use of the All Writs Act of 1789, a two-sentence law stating that federal courts “may issue all writs necessary or appropriate in aid of their respective jurisdictions and agreeable to the usages and principles of law.”

“The implications of the government’s demands are chilling. If the government can use the All Writs Act to make it easier to unlock your iPhone, it would have the power to reach into anyone’s device to capture their data. The government could extend this breach of privacy and demand that Apple build surveillance software to intercept your messages, access your health records or financial data, track your location, or even access your phone’s microphone or camera without your knowledge.

Opposing this order is not something we take lightly. We feel we must speak up in the face of what we see as an overreach by the U.S. government.

We are challenging the FBI’s demands with the deepest respect for American democracy and a love of our country. We believe it would be in the best interest of everyone to step back and consider the implications.”


I don’t know what the right answer is. I don’t know if unlocking this one iPhone will give us the information we need to bring down the terrorists or bring justice to the families who lost someone that day in San Bernardino. I don’t know if creating a “backdoor” to the iPhone will lead to our private information eventually becoming public.

It’s a tricky situation. But what I keep wondering is, when it comes to privacy and security, where do we draw the line? When is enough enough? Will there ever be a time when we can stop worrying about these things?

When do we actually get to feel secure?

Author: Nicole Cameron

Editor: Renée Picard

Image: Apple Customer Letter screenshot
